Introduction

In the digital era, as people spend more of their social lives online and user-generated material accumulates across platforms, content moderation has become critical to the integrity and security of online environments. Keeping increasingly crowded virtual spaces safe and respectful is challenging, however, and requires new approaches and advanced technologies that can handle large volumes of fast-spreading data. Incorporating artificial intelligence into moderation responds to the need for flexible, tailored methods that fit a changing digital landscape and its diverse cultural, legal, and ethical factors.

Understanding AI Content Moderation

AI-driven moderation is a natural evolution of content management strategies at a time when artificial intelligence and digitalisation reach into ever more aspects of our lives. Applying AI typically brings greater efficiency, time savings, and cost-effectiveness, often along with the ability to tackle challenges where traditional approaches prove inadequate. In content moderation, artificial intelligence plays a crucial role in swiftly overseeing user-generated material, the volume of which has grown enormously in recent years. Leveraging advanced algorithms, machine learning, and natural language processing, AI handles large volumes of data across formats, including text, images, videos, and other multimedia. It applies the same rules consistently to every item and is well suited to routine tasks such as identifying spam, removing duplicates, and enforcing community guidelines. However, AI’s support extends beyond efficiency to more intricate aspects such as threat management, scalability, and real-time responsiveness.

Nevertheless, while AI content moderation is a major advance, it does not entirely replace human effort. Human judgement remains essential where content calls for nuanced understanding, emotional intelligence, or attention to complex, context-specific concerns. Working together, humans and technology can ensure that online content adheres to established standards, enhances the user experience, and fosters the desired atmosphere.

The Evolution of Content Moderation

As digital services adopt content moderation as standard practice, it has become a necessity across jurisdictions and must keep pace with a constantly shifting environment. Social media illustrates this well: it has become an extensive information hub and has seen a rapid surge in users and user-generated material. The following statistics show the growing popularity of social platforms and the pervasive influence of user-generated content:

- As of 2024, 4.95 billion people use social media, or 61% of the world’s population. (Source: DemandSage)

- In 2022, internet users worldwide spent an average of 151 minutes per day on social media (about two and a half hours), up from 147 minutes the previous year. (Source: Statista)

- In just one minute, 240,000 images are shared on Facebook, 65,000 images are posted on Instagram, and 575,000 posts are sent on X. (Source: HubSpot)

To keep up, platforms continually deploy new methods and technologies, with a noticeable shift from manual effort towards more advanced solutions. The comparison below sets out the moderation approaches, key technologies, and challenges of a decade ago and of today, showing how the practice has changed over time:

A Decade Ago:

Content Volume: Comparatively smaller scale and diversity.

Types of Content: Predominantly text-based material, with fewer images and videos.

User Engagement: High, but less widespread and constant than today.

Moderation Approach: Mostly manual and reactive, with human moderators playing a central role.

Key Technology: Basic automation tools, like keyword filters and simple algorithms.

Challenges: Time-consuming moderation process, susceptibility to mistakes and difficulty managing a growing content volume.

Nowadays:

Content Volume: Vast and still growing, with an unparalleled amount of user-created material.

Types of Content: Diverse and multimedia-rich, including text, images, videos, podcasts, live streams, etc.

User Engagement: Constant and widespread, with billions of individuals actively contributing content.

Moderation Approach: Experienced moderators with industry-specific cultural and linguistic skills, supported by innovative tech solutions and a mix of moderation types, including proactive, reactive, distributed, and hybrid strategies.

Key Technology: AI, machine learning algorithms, natural language processing, and automation tools.

Challenges: Addressing context, cultural diversity, and language nuances while keeping up with rapidly evolving online behaviours and mitigating emerging risks.

How AI Content Moderation Works

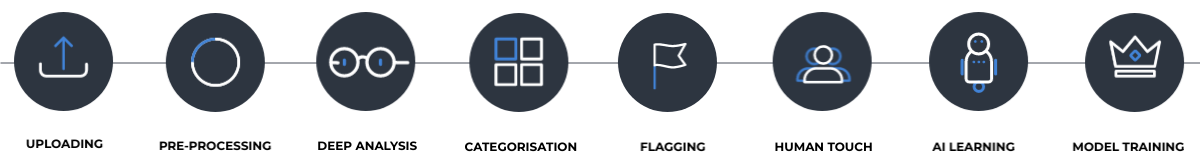

AI content moderation uses artificial intelligence algorithms and machine learning models to analyse user-generated content on digital platforms automatically. Importantly, the process can be refined by involving humans, especially for intricate or ambiguous material. It typically involves several key steps:

- Uploading: AI moderation starts when people upload different forms of content to express their feelings, share opinions or contribute to online discussions.

- Pre-Processing: The content is frequently subjected to pre-processing, involving organisation and preparation for analysis. This step may include converting diverse formats into a standardised form to ensure uniform evaluation.

- Deep Analysis: Artificial intelligence evaluates the shared material using techniques such as natural language processing, computer vision, and other machine learning methods.

- Categorisation: The reviewed content is usually sorted into predefined categories, encompassing areas such as offensive language, violence, hate speech, copyright violation, unsolicited messages and others. Each category is usually associated with a score, indicating the probability of the content belonging to that specific classification.

- Flagging for Review: If the material is identified as potentially harmful, it is flagged for closer review and escalated to human moderators for a detailed, context-sensitive assessment.

- Human Touch: Moderators, equipped with real-time information, carefully inspect the flagged content, considering context and nuance before deciding whether it should be published or removed.

- AI Algorithm Learning: Following human review, the AI algorithms use feedback from moderators to improve their future accuracy and effectiveness. This involves learning from both mistakes and successes, so that the system’s performance improves continuously.

- Training the Model: Underlying all of these steps, the AI model is trained, usually before deployment and again as new feedback arrives, on a varied dataset containing both acceptable and objectionable examples. In the process, it learns the patterns and features that distinguish different content types. Annotation, the labelling of those examples, bridges the gap between raw data and effective training, enabling models to discern patterns and make informed predictions.
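The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production system: the category names, the `score_content` stub, the `record_review` helper, and both thresholds are hypothetical and chosen only for this example. A real platform would replace the stub with a trained model.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.90  # at or above this score, content is removed automatically
REVIEW_THRESHOLD = 0.50  # at or above this score, content goes to a human moderator


@dataclass
class Decision:
    action: str    # "publish", "review", or "remove"
    category: str  # highest-scoring category
    score: float   # score of that category
    scores: dict = field(default_factory=dict)


def preprocess(text: str) -> str:
    """Pre-processing: normalise case and whitespace before analysis."""
    return " ".join(text.lower().split())


def score_content(text: str) -> dict:
    """Stand-in for a trained classifier (an assumption of this sketch).

    Returns a probability-like score per category, based on a toy phrase
    lookup so that the example runs without any model.
    """
    toy_signals = {
        "spam": ("buy now", "free money"),
        "offensive_language": ("idiot",),
    }
    scores = {}
    for category, phrases in toy_signals.items():
        hits = sum(phrase in text for phrase in phrases)
        scores[category] = min(1.0, 0.6 * hits)
    return scores


def moderate(raw_text: str) -> Decision:
    """Categorise content, then publish it, flag it for review, or remove it."""
    text = preprocess(raw_text)
    scores = score_content(text)
    category, score = max(scores.items(), key=lambda item: item[1])
    if score >= REMOVE_THRESHOLD:
        action = "remove"
    elif score >= REVIEW_THRESHOLD:
        action = "review"
    else:
        action = "publish"
    return Decision(action, category, score, scores)


# Moderator verdicts are stored so they can later serve as training labels.
feedback_log = []


def record_review(decision: Decision, moderator_verdict: str) -> None:
    """Human feedback step: keep the verdict alongside the model's output."""
    feedback_log.append(
        {"category": decision.category, "score": decision.score,
         "verdict": moderator_verdict}
    )
```

With these toy rules, a post containing one spam phrase scores 0.6 and is routed to human review, while a post containing both phrases scores 1.0 and is removed automatically. The two thresholds are the main design choice: lowering the review threshold sends more borderline content to moderators, and raising the removal threshold leaves fewer decisions fully automated.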

AI Content Moderation Techniques

The spectrum of AI content moderation techniques ranges from conventional methods such as keyword filtering, through real-time analysis, to more recent technologies such as image and voice recognition. Each plays a role in identifying and addressing potentially harmful information, and each continues to evolve as AI systems learn and as the range of content types grows. These techniques include:

- Keyword Filtering: This method relies on a predefined list of keywords or phrases linked to inappropriate or violating content and scans submitted content for them.

- Image and Video Recognition: This entails analysing pictures and videos to identify elements that may breach community guidelines, such as explicit material or violence, allowing quick detection and moderation.

- Text Sentiment Analysis: This approach evaluates the sentiment of a text, identifying positive, negative, or neutral tones, to help recognise offensive language, hate speech, or other inappropriate expressions.

- Voice Recordings Assessment: This method uses voice recognition technology and natural language processing to understand spoken words and their context, picking out elements such as explicit content or offensive speech.

- Contextual Analysis: This enables AI to make more nuanced decisions by considering the context surrounding content.

- User Behaviour Analysis: This technique detects patterns associated with spam, bots, or coordinated efforts to spread harmful content by identifying abnormal or suspicious activity.
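Two of the simpler techniques in this list, keyword filtering and user behaviour analysis, can be illustrated with the Python standard library alone. The sketch below is a hedged example rather than a recommended implementation: the blocklist, the `find_blocked_terms` function, and the `BurstDetector` class are hypothetical names introduced here, and the behaviour check covers only one signal (posting rate), assuming timestamps arrive in non-decreasing order.

```python
import re
from collections import deque

# Hypothetical blocklist; a real one would be curated and maintained by the platform.
BLOCKED_TERMS = ["free money", "scam"]

# Match whole words or phrases only, so "scam" does not match "scamper".
_BLOCKED_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


def find_blocked_terms(text: str) -> list:
    """Keyword filtering: return the blocked terms found in the text, lowercased."""
    return [match.group(0).lower() for match in _BLOCKED_PATTERN.finditer(text)]


class BurstDetector:
    """User behaviour analysis: flag accounts that post too often in a time window.

    Assumes timestamps (in seconds) are supplied in non-decreasing order.
    """

    def __init__(self, max_posts: int, window_seconds: float):
        self.max_posts = max_posts
        self.window_seconds = window_seconds
        self._timestamps = {}  # user id -> deque of recent post times

    def record_post(self, user_id: str, timestamp: float) -> bool:
        """Record a post and return True if the user exceeds the allowed rate."""
        recent = self._timestamps.setdefault(user_id, deque())
        recent.append(timestamp)
        # Drop posts that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_posts
```

Keyword filtering of this kind is fast and transparent but easy to evade with misspellings, which is one reason it is usually combined with the contextual and behavioural techniques listed above.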

Advantages of Using AI for Moderation

The benefits of employing AI in content moderation are multifaceted. Firstly, AI can automate routine moderation tasks, handling them faster and more consistently than manual review. This saves time and ensures a reliable approach to common issues. One pivotal advantage lies in AI’s ability to manage the ever-increasing volume of user-generated content. AI quickly recognises and analyses potential threats, and passes to human moderators the specific cases that demand their expertise. This enhances overall efficiency, reduces the need for time-consuming manual review, and limits moderators’ exposure to harmful material.

Additionally, artificial intelligence excels at continuous, large-scale moderation, watching many elements simultaneously, which reduces the risk of inconsistent decisions influenced by personal beliefs or emotions. Moreover, AI can analyse published information in real time, helping to keep users safe during live streams and other on-the-fly interactions. Beyond these core functions, AI content moderation can adapt to evolving patterns, handle emerging complexities, and proactively identify and mitigate potential risks. Together, these qualities make moderation more robust and comprehensive.

Challenges and Limitations of AI Content Moderation

Despite its numerous advantages, AI content moderation faces challenges and limitations that affect its effectiveness. Addressing them requires a holistic approach that combines technological advancement, ongoing research, and collaboration between AI developers, digital platforms, and regulatory bodies. The most significant challenge is limited contextual understanding, which often leads to content being misinterpreted and misclassified. This limitation produces false positives, where content is flagged as harmful when it is not, and false negatives, where genuinely harmful material is missed, and both make it difficult to keep a moderation system accurate.

Furthermore, the performance of AI algorithms is closely tied to the quality and representativeness of the data used in training, so the algorithms must be updated and improved regularly. This ongoing process is essential for adapting to changing tactics, trends, and challenges. It requires robust monitoring, analysis, and adaptation, so that the algorithms learn from historical data while staying responsive to emerging patterns and evolving content.
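The false positives and false negatives described above can be tracked with a few standard metrics. The sketch below shows one way to do so; the `moderation_metrics` function is a hypothetical helper, and the counts are made up purely for illustration.

```python
def moderation_metrics(true_positives, false_positives,
                       false_negatives, true_negatives):
    """Summarise moderation errors from confusion-matrix counts."""
    flagged = true_positives + false_positives    # everything the system flagged
    harmful = true_positives + false_negatives    # everything actually harmful
    harmless = false_positives + true_negatives   # everything actually harmless
    return {
        # Share of flagged items that were genuinely harmful.
        "precision": true_positives / flagged if flagged else 0.0,
        # Share of harmful items that the system caught.
        "recall": true_positives / harmful if harmful else 0.0,
        # Share of harmless items that were wrongly flagged.
        "false_positive_rate": false_positives / harmless if harmless else 0.0,
    }


# Made-up counts for 1,000 reviewed posts, for illustration only.
metrics = moderation_metrics(
    true_positives=80, false_positives=20,
    false_negatives=40, true_negatives=860,
)
```

In this invented example, 80 of the 100 flagged posts were harmful (precision of 0.8), while 80 of the 120 harmful posts were caught (recall of about 0.67). Monitoring such figures over time is one way to notice when a model needs retraining.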

In addition, AI moderation raises significant ethical and legal concerns. The ethical ones include upholding user privacy and using AI-powered tools responsibly to ensure fair and unbiased oversight. On the legal front, platforms must meet diverse legal requirements for moderation around the world, which demands ongoing attention because regulations vary and evolve rapidly. Also worth emphasising is the rise of generative AI, such as advanced language models, which create text that closely resembles human communication in structure, style, and coherence. This similarity makes it challenging for traditional and even more advanced AI tools to distinguish user-generated content from content produced by generative AI. Lastly, digital platforms using AI moderation may have difficulty handling content across multiple languages, with their varying nuances and cultural contexts, and may struggle with visual content, which needs sophisticated analysis that is not always foolproof.

The Future of AI Content Moderation

The future of AI content moderation will likely bring continuous advancements and technological refinements that address current challenges and improve overall effectiveness. AI algorithms are expected to become more sophisticated in contextual understanding, reducing misinterpretation and classifying diverse forms of content more accurately. Technologies including multimodal AI, reinforcement learning, collaborative filtering, and blockchain are considered part of these future developments, supporting better comprehension, verification, and authenticity checks. Ongoing progress in natural language processing and real-time analysis will help make AI tools more capable and more responsive to the complexities of user-generated material.

As for whether AI will ultimately replace humans in content moderation, this is unlikely in the foreseeable future. A collaborative approach is more probable, with AI assisting and augmenting moderators to make the more complex operations more efficient. Yet no one can predict what the very distant future will bring.

Conclusions: What is Worth Remembering?

Leveraging AI in content moderation brings significant benefits: it supports a range of processes, enhances productivity, and improves reliability in content management and user protection. However, its implementation should be approached with care, considering each platform’s unique requirements, industry, and audience. It involves splitting responsibility, with technology handling repetitive tasks and human intelligence taking charge of more complex decision-making. All of this must be done with close attention to ethical considerations, data privacy, and evolving legal standards.