Introduction

The rise of digital services and social media platforms has created vast opportunities for connectivity, content sharing, and entertainment, attracting people of all ages and backgrounds. But these benefits also bring challenges such as privacy concerns, misinformation, and conflict between users. Initiatives such as content moderation and censorship are essential for navigating this landscape, ensuring safety and fostering responsible engagement. Nevertheless, the advantages of digital interaction must be carefully balanced against its drawbacks, especially when security and free speech are in tension.

Moderation and censorship each play a crucial role in nurturing healthy online communities, but in different ways. What differentiates them most is that content moderation typically manages digital information in a nuanced and adaptable way, taking into account factors such as context and community standards. Censorship, in contrast, usually imposes more rigid restrictions on specific types of speech without considering contextual nuances. Let’s take a closer look at how each works, where they overlap, and the challenges they raise.

Why People Confuse Content Moderation with Censorship

The concepts of moderation and censorship can be confusing, difficult to distinguish, and controversial, because the line between them is often blurry, making it hard to determine where one ends and the other begins. The confusion is compounded by a lack of transparent justification, accessible communication, and clear guidelines or standardised practices across platforms, which leads to inconsistencies in how content is moderated or censored. Moreover, what counts as harmful or objectionable content is subjective and can vary greatly depending on individual interpretations and societal norms.

In such cases, legitimate and unbiased moderation offers a solution, complemented by constructive discussion that leads to more informed and equitable decisions and protects online communities against private censorship practices. Even so, consensus can be difficult to reach when audiences hold strong convictions on both ends of the spectrum, for instance on contentious political or social issues where opinions are deeply divided. The last resort is then to weigh carefully the need to safeguard the user experience against the need to mitigate potential harm, accepting that the balance will tip differently from one case to another.

Consider a blogging platform where users can publish articles on various topics. The platform has guidelines that prohibit explicit content and promote respectful discourse, reflecting its conservative stance on content oversight. One day, a user submits an article discussing controversial political viewpoints that some readers could find offensive, and the moderation team decides not to publish it because of its inflammatory language. The author accuses the platform of censorship. From the platform’s perspective, however, rejecting the article is a matter of content moderation, based on rules clearly set out in its policy.

Understanding the Difference: Content Moderation and Censorship

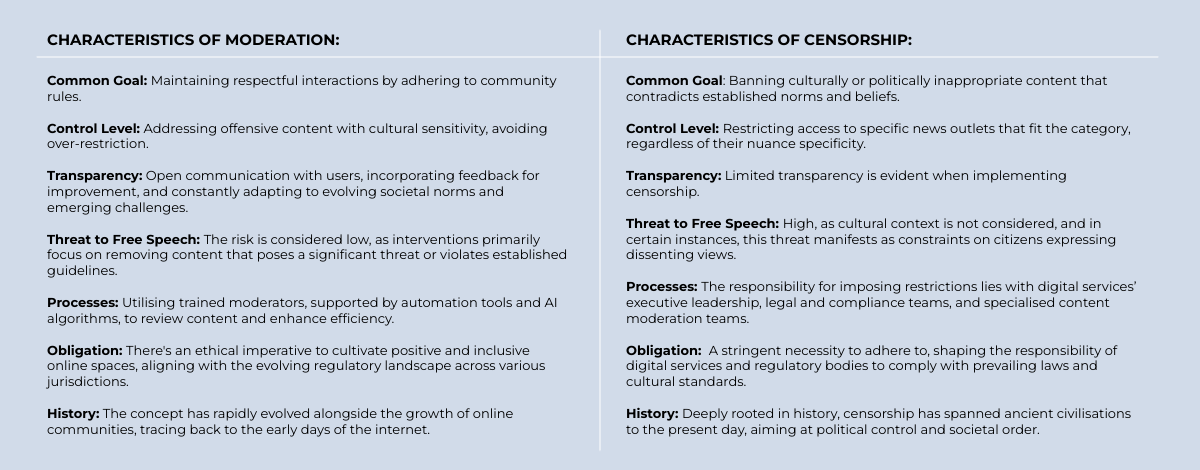

From one perspective, digital moderation and online censorship are two distinct concepts that serve different purposes. While content moderation helps protect users from exposure to harmful or offensive material, censorship aims to prevent certain types of content, deemed unacceptable under regulations, laws, or prevailing societal norms, from reaching the public. On the other hand, the two have much in common: both involve watching over shared information and intervening to some degree according to a set of rules and requirements. Below is a summary highlighting the distinctive features of each:

1. Content Moderation – a Guardian of Online Well-being

Moderation is the multifaceted process of reviewing and managing user-generated content to ensure compliance with established community guidelines and policies, as well as ethical and legal standards. Its primary objective is to improve user safety by identifying and removing inappropriate, unfair, or offensive content, such as material that promotes hate speech, violence, or harassment, or that spreads misinformation. This applies to all types of content shared on online platforms, including text, images, videos, and other multimedia. Importantly, this kind of oversight is limited to preventing or removing material that significantly breaks internal rules, infringes on privacy, or could lead to cybercrime or harm to individuals, and action is taken only when necessary. Common forms of content moderation include:

- Manual Review: Human moderators assess and evaluate content flagged by users or algorithms for potential violations of platform policies.

- Automated Filtering: Various algorithms and automated tools detect and filter out inappropriate or harmful information, such as spam, hate speech, or graphic material.

- Community Reporting: Users report content they believe violates community guidelines, which moderators review for appropriate action.

- Age Restrictions: Platforms implement age restrictions to limit access to inappropriate content for specific age groups.

- Content Labelling: Content, including text and files, is labelled or categorised based on its nature, allowing users to make informed decisions about what they view.

- Pre-Moderation: Content is checked and approved before being available on a platform.

- Post-Moderation: Content is published first and then analysed by moderators, who act if it violates platform policies.

- Hybrid Moderation: This approach combines manual review with automated filtering. It involves using automated tools to identify potentially problematic content, which human moderators then review for further evaluation and action.
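The hybrid approach, and the difference between pre- and post-moderation, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular platform works: the keyword-based `toy_score` function is a hypothetical stand-in for a real automated filter, and the two thresholds (0.9 for automatic removal, 0.5 for human review) are arbitrary values chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical thresholds; a real system would tune these per policy area.
REMOVE_THRESHOLD = 0.9  # scores at or above this are removed automatically
REVIEW_THRESHOLD = 0.5  # scores at or above this go to a human moderator

# Toy stand-in for an automated filter (hypothetical placeholder terms).
FLAGGED_TERMS = {"spamlink", "badword"}


def toy_score(text: str) -> float:
    """Return a violation score in [0, 1]: 0.5 per flagged word, capped at 1.0."""
    hits = sum(word in FLAGGED_TERMS for word in text.lower().split())
    return min(1.0, hits / 2)


@dataclass
class Post:
    post_id: int
    text: str
    status: str = "new"


@dataclass
class HybridModerator:
    score: Callable[[str], float]
    pre_moderation: bool = True  # False means post-moderation
    review_queue: list[Post] = field(default_factory=list)

    def submit(self, post: Post) -> Post:
        s = self.score(post.text)
        if s >= REMOVE_THRESHOLD:
            # Automated filtering handles clear-cut cases on its own.
            post.status = "removed"
        elif s >= REVIEW_THRESHOLD:
            # Borderline cases go to human review. Under pre-moderation the post
            # stays hidden until approved; under post-moderation it is visible
            # while it waits in the queue.
            post.status = "held" if self.pre_moderation else "published"
            self.review_queue.append(post)
        else:
            post.status = "published"
        return post

    def human_review(self, post_id: int, violates: bool) -> Post:
        """A human moderator makes the final call on a queued post."""
        for i, post in enumerate(self.review_queue):
            if post.post_id == post_id:
                del self.review_queue[i]
                post.status = "removed" if violates else "published"
                return post
        raise KeyError(f"post {post_id} is not awaiting review")


moderator = HybridModerator(score=toy_score, pre_moderation=True)
print(moderator.submit(Post(1, "Great article, thanks!")).status)  # published
print(moderator.submit(Post(2, "Click this spamlink now")).status)  # held
print(moderator.submit(Post(3, "badword spamlink")).status)         # removed
print(moderator.human_review(2, violates=False).status)             # published
```

The design mirrors the division of labour described above: automation settles the obvious cases at either end of the scale, and only the uncertain middle band consumes human reviewers' time.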

Moderation Example Based on the Facebook Use Case:

Facebook, a global social media leader, moderates the content its users generate by swiftly identifying and removing material that violates its guidelines. AI algorithms handle more routine issues, while human review teams around the world manage more intricate cases, combining the efficiency of AI with the nuanced decision-making of humans. This supports positive user experiences and engagement, although the results depend closely on moderators’ skills and knowledge in avoiding oversights. (Source & inspiration: Facebook)

2. Digital Censorship – Navigating the Boundaries of Online Expression

This intricate practice involves regulating and blocking information, ideas, or creative expression in the digital realm, with aims such as maintaining essential standards, upholding morality, ensuring national security, or eliminating brutality or discrimination from digital media. Censorship is typically imposed by governments, regional governing bodies, local authorities, or other influential organisations, and applies to all individuals and communication channels within a given territory. It often leads to the suppression or restriction of specific types of information and typically depends on the political situation, prevailing ideologies, societal norms, and the perceived interests of those in power. The most common forms of censorship are:

- Legal Restrictions: Governments may pass laws or regulations prohibiting certain types of speech, expression, or content deemed objectionable or harmful.

- Media Control: Authorities may control media outlets, including newspapers, television, radio, and online platforms, by censoring or manipulating content to align with government agendas or ideologies.

- Internet Censorship: Governments or organisations may employ technical measures to block access to certain websites, platforms, or online content deemed inappropriate or threatening to national security or social order.

- Self-Censorship: Individuals or organisations may voluntarily refrain from expressing certain ideas or viewpoints due to fear of reprisal, social stigma, or other consequences.
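To make the "Internet Censorship" item more concrete, here is a minimal sketch of the simplest matching logic behind website blocking: checking a requested URL's host against a domain blocklist. The domain names are hypothetical placeholders using reserved `.example` names, and real deployments usually enforce blocks at the DNS resolver or network level, which this sketch does not model.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist using reserved example domains.
BLOCKED_DOMAINS = frozenset({"blocked.example", "banned.example.org"})


def is_blocked(url: str, blocklist: frozenset[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host, or any parent domain of it, is on the blocklist."""
    # urlsplit().hostname is already lower-cased and excludes any port.
    host = (urlsplit(url).hostname or "").rstrip(".")
    labels = host.split(".")
    # Check "news.blocked.example", then "blocked.example", then "example",
    # so a block on a domain also covers its subdomains, while a lookalike
    # such as "notblocked.example" is not caught by accident.
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))


print(is_blocked("https://news.blocked.example/article"))  # True
print(is_blocked("https://notblocked.example/"))           # False
print(is_blocked("http://BANNED.example.org:8080/page"))   # True
```

Matching whole domain labels rather than raw string suffixes is the detail that keeps such filters from over-blocking unrelated sites, one small example of how technical choices affect access to legitimate information.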

Censorship Example Based on a Hypothetical Scenario:

In a fictional country, the government enforces strict censorship measures targeting online content associated with extremist ideologies and terrorism. Internet service providers are required to use their own resources to block websites, social media accounts, and forums that share extremist propaganda. The measure helps prevent the spread of extremist ideologies and terrorist propaganda, contributing to national security by thwarting potential threats. Nevertheless, such strict censorship can also limit citizens’ access to legitimate information that could help them better understand and critically evaluate the situation.

3. A Snapshot Comparison of the Two

Below is a detailed comparison outlining the distinct characteristics of content moderation and censorship, shedding light on their goals, methods, and historical contexts. The analysis also provides insights into the potential implications of each approach on freedom of speech, user experience, and the broader online ecosystem.

Preventing Users from Feeling Silenced: Effective Strategies

It is essential to recognise that misunderstandings can sometimes arise between users and moderators about the intent behind specific content. Individuals or organisations may need to provide context or explain why they posted material that initially appears inappropriate. Online services should be open to these discussions and value the opportunity to better understand the user’s perspective. By actively listening to their audience and engaging in meaningful dialogue, platforms demonstrate a commitment both to enforcing guidelines and to respecting the voices and intentions of their community members, so that users do not feel silenced.

Below are key strategies for fostering open communication while addressing concerns related to content moderation:

- Transparent Guidelines: Communicate community guidelines and content policies. Ensure guidelines are easily accessible and understandable for all users.

- Educational Initiatives: Implement educational campaigns on acceptable online behaviour. Provide resources to help users understand the reasons behind moderation actions.

- Clear Communication: Provide clear explanations for content removal or moderation decisions. Use plain language to enhance understanding and reduce confusion.

- Appeal Mechanisms: Establish a straightforward process for users to appeal moderation decisions. Ensure timely and respectful responses to appeals, acknowledging user concerns.

- Community Engagement: Foster a sense of community through forums, discussions, and feedback mechanisms. Encourage users to express their opinions on content policies and moderation practices.

- Moderator Transparency: Encourage moderators to engage with the community transparently. Communicate content moderation challenges and the importance of maintaining a positive online environment.

- Consistent Enforcement: Apply content policies consistently to all users, regardless of their prominence. Avoid biased or selective enforcement of rules.

- Regular Policy Reviews: Conduct regular reviews of content policies to adapt to evolving online dynamics. Adjust policies based on user feedback and emerging challenges.

- Cultural Sensitivity: Consider cultural nuances and diverse perspectives when moderating content. Consult with users from different backgrounds to ensure inclusivity. Combine human moderators with AI technologies, so that automated review is complemented by human understanding of context and empathy.

- Encourage Positive Contributions: Promote positive content and contributions within the community. Recognise and highlight valuable user contributions to reinforce positive behaviour.

- Open Door for Dialogue: Establish channels for open dialogue between users and platform representatives. These could include support tickets, feedback forums, or live Q&A sessions to make communication smoother. Actively listen to user concerns and demonstrate a commitment to addressing issues.
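Several of these strategies, namely clear explanations, a straightforward appeal process, and timely responses, can be built into how moderation decisions are recorded. The sketch below is a hypothetical data model rather than any platform's actual system; the class name, the status values, and the seven-day response window are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical service level: the text only asks for "timely" responses.
APPEAL_RESPONSE_WINDOW = timedelta(days=7)


@dataclass
class ModerationDecision:
    content_id: str
    action: str       # e.g. "removed"
    guideline: str    # the specific rule the content was found to break
    explanation: str  # plain-language reason shown to the user
    appeal_status: str = "none"
    appeal_due_by: Optional[datetime] = None

    def notice(self) -> str:
        """Clear communication: what happened, why, and how to respond."""
        return (
            f"Your content {self.content_id} was {self.action} under the "
            f"'{self.guideline}' guideline. {self.explanation} "
            "If you believe this is a mistake, you can appeal."
        )

    def file_appeal(self, filed_at: datetime) -> None:
        """Open an appeal and record the date by which it should be answered."""
        if self.appeal_status != "none":
            raise ValueError("an appeal has already been filed")
        self.appeal_status = "pending"
        self.appeal_due_by = filed_at + APPEAL_RESPONSE_WINDOW

    def resolve_appeal(self, decision_stands: bool) -> None:
        """A reviewer either confirms the original decision or reverses it."""
        if self.appeal_status != "pending":
            raise ValueError("no pending appeal to resolve")
        if decision_stands:
            self.appeal_status = "decision_upheld"
        else:
            self.appeal_status = "decision_overturned"
            self.action = "restored"

    def is_overdue(self, now: datetime) -> bool:
        """True if a pending appeal has passed its response deadline."""
        return (
            self.appeal_status == "pending"
            and self.appeal_due_by is not None
            and now > self.appeal_due_by
        )


decision = ModerationDecision(
    content_id="post-42",
    action="removed",
    guideline="Respectful discourse",
    explanation="The post contains personal insults directed at another user.",
)
print(decision.notice())
decision.file_appeal(filed_at=datetime(2024, 1, 2))
print(decision.is_overdue(now=datetime(2024, 1, 5)))   # False: still within the window
print(decision.is_overdue(now=datetime(2024, 1, 10)))  # True: past the 7-day window
decision.resolve_appeal(decision_stands=False)
print(decision.appeal_status, decision.action)         # decision_overturned restored
```

Storing the guideline and a plain-language explanation alongside every action means the user-facing notice and any later appeal review both work from the same stated reason, which supports consistent enforcement.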

Working towards consensus is another crucial aspect of this dialogue. When users explain their intentions and platform representatives take the time to understand and evaluate the content in context, there is an opportunity to find common ground. This may result in the content being amended to meet community standards while still allowing the user to express their viewpoint, or it may lead to more informed enforcement of the rules. Either way, this approach mitigates feelings of being silenced or misunderstood.

The Role of Content Moderation in a Global Context

In a global context, content moderation plays a crucial role in shaping online interactions and maintaining the integrity of digital spaces, especially as harmful and violent material increasingly circulates across online platforms. Moderation helps enforce community guidelines, prevents the spread of harmful or inappropriate information, and promotes respectful discourse across diverse cultures and languages. By monitoring user-generated content and addressing violations promptly, it helps curb misinformation, hate speech, and other harmful content that can negatively affect individuals and communities worldwide. Effective oversight also fosters trust and safety among users in an interconnected digital landscape, encouraging continued engagement and participation on online platforms. In addition, it is pivotal in upholding legal and regulatory standards and ensuring compliance with regional laws and cultural sensitivities across different countries and jurisdictions. Moderation is thus a cornerstone of responsible digital stewardship, fostering inclusive and secure online environments conducive to global communication and collaboration.

To illustrate the severity of the issue, in the second quarter of 2023, Facebook removed 18 million pieces of hate speech, along with 1.8 million pieces of violent content and 1.5 million pieces of bullying and harassment-related content. (Source: Statista)
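Set side by side, the three figures quoted above show how heavily hate speech dominates these removals. The short calculation below uses only those three numbers; it says nothing about other enforcement categories or about how much content was reviewed overall.

```python
# Figures as quoted above for Q2 2023, in millions of pieces of content.
removed = {
    "hate speech": 18.0,
    "violent content": 1.8,
    "bullying and harassment": 1.5,
}

total = sum(removed.values())
print(f"total: {total:.1f} million")
for category, count in removed.items():
    # Share of the combined total for these three categories only.
    print(f"{category}: {count / total:.1%} of the total")
```

This prints a combined total of 21.3 million, with hate speech at 84.5%, violent content at 8.5%, and bullying and harassment at 7.0% of that total.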

What to Consider When Outsourcing Content Moderation

Outsourcing is a smart move for digital businesses, especially those that lack the in-house resources or expertise for content moderation. It offers cost savings, flexibility, and scalability, allowing companies to adjust services to their needs while keeping online spaces secure and user-friendly. However, finding the right BPO (business process outsourcing) partner is crucial. Such an organisation should understand digital content challenges, provide industry-specific expertise, offer multilingual support, and use advanced technology to manage risks. Success hinges on choosing a provider that tailors its services to the company’s needs, considering factors such as the audience, location, languages, and regulations. An ideal outsourcing partner goes beyond simply completing tasks: it shares the company’s goal of creating a safe online environment without unnecessary restrictions.

Conclusion

Understanding the nuanced differences between content moderation and censorship is crucial to the ongoing discourse on free speech, particularly amid concerns that major social media platforms may infringe on this freedom by fact-checking and removing misinformation. When properly executed, moderation is a vital mechanism for ensuring the safety and integrity of online communities and a critical determinant of user loyalty, brand perception, and the success of online services. However, to avoid conflating moderation with censorship, digital platforms must emphasise open dialogue, adapt to changing trends, norms, and societal needs, and recognise context and nuance. This approach ensures that moderation practices are perceived not as censorial but as necessary steps to maintain a constructive and respectful online environment.