Introduction

Hate speech is a prominent and worrying form of unethical behaviour that increasingly spreads through digital space, promoting discrimination, fuelling violence, and harming the well-being and mental health of individuals and entire communities. In recent years it has become a fundamental problem, particularly for social media and related services, which must maintain a safe and inclusive environment for everyone, regardless of users’ backgrounds, identities, or beliefs. The abundance of hate speech worldwide casts a shadow on the positive aspects of modern connectivity, running against moral principles and threatening the stability and reliability of digital platforms. Addressing it requires concerted effort: robust content moderation, advanced detection technologies, and a digital culture that values respectful and responsible engagement. For platform operators, managing these elements well is also necessary to protect brand reputation, boost popularity, retain customer loyalty, and secure future revenue streams.

Prevalence of Hate Speech in Online Platforms

As digitalisation touches more aspects of our lives, many online services are expanding rapidly and attracting new users who want to belong to a community, stay informed, and share thoughts and emotions with others. Social media platforms have naturally emerged as a central hub for this interaction, breaking through geographical, cultural, and communication barriers. Their strength lies in letting users be noticed, listened to, and appreciated in a manner often less inhibited by fear and shame than offline dialogue, which makes them a powerful tool for self-expression and global outreach. Unfortunately, not everyone joins to socialise: some individuals and organisations engage in unfair practices for personal gain, ideological motives, or malicious intent. As a result, incidents of hate speech on social media are reported globally, affecting nearly every continent, society, and demographic group.

Below are a few selected statistics from the Anti-Defamation League (ADL) study titled “Online Hate and Harassment: The American Experience 2023”. According to this document, online threats remain persistent and deep-rooted problems on social media platforms, as presented:

The Complexity in Defining Hate Speech

To understand which new challenges and complexities this phenomenon brings, it helps to look at how hate speech has taken shape over time. It has evolved from historical tools of marginalisation and discrimination, adapting to new forms with the rise of communication technologies.

Today, hate speech is usually subjective and context-dependent, which makes it difficult to write a universal definition that covers every instance. In the digital space, it typically involves inappropriate language or expressions, but it also extends to other forms of communication and behaviour, including actions, symbols, images, and gestures, and it can pervade social media posts, comments, articles, movies, or messages. Its targets often reflect prevailing societal issues and cultural context, and include race, ethnicity, religion, gender, sexual orientation, nationality, disability, political affiliation, social identity, immigration status, and health status.

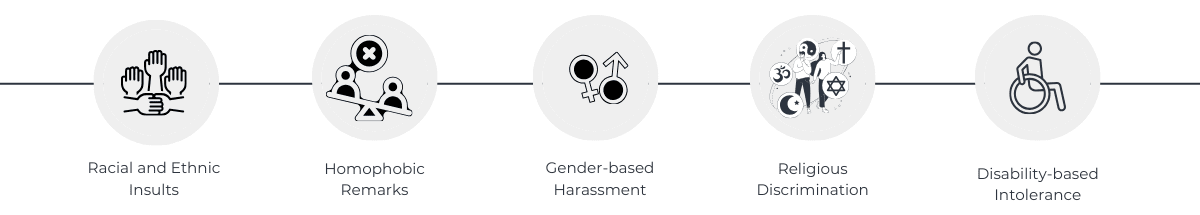

Below are some selected examples illustrating the diverse forms hate speech can take and showcasing the harmful impact it can have:

- Racial and Ethnic Insults: Posting derogatory comments or memes targeting specific racial or ethnic groups.

- Homophobic Remarks: Sharing content containing offensive language or jokes directed at the LGBTQ+ community.

- Gender-based Harassment: Engaging in online behaviour that discriminates against individuals based on their gender.

- Religious Discrimination: Posting content that ridicules or insults people based on their beliefs or practices.

- Disability-based Intolerance: Sharing memes or comments that mock individuals with disabilities.

In addition, online hate speech is especially harmful to children and teenagers, who have grown up alongside social media and digital communication. Exposure can significantly affect their mental health, self-esteem, and overall wellness, creating social, psychological, and emotional challenges. In the worst cases, it can culminate in severe outcomes such as breakdown or suicide attempts. Minors are particularly vulnerable because of their stage of development, susceptibility to peer influence, need for social validation, and potentially limited digital literacy.

Content Moderation: A Need to Go Beyond the Standard

According to another ADL report, “Block/Filter/Notify: Support for Targets of Online Hate Report Card” from mid-2023, popular social media services are not yet effectively supporting individuals who are targets of online hate and harassment, despite their stated intentions to protect users. This gap between aspiration and action highlights the urgency of more advanced content moderation strategies. That means adopting robust solutions that go beyond basic security measures and enable better threat detection, efficient prevention, nuanced understanding, and proactive intervention.

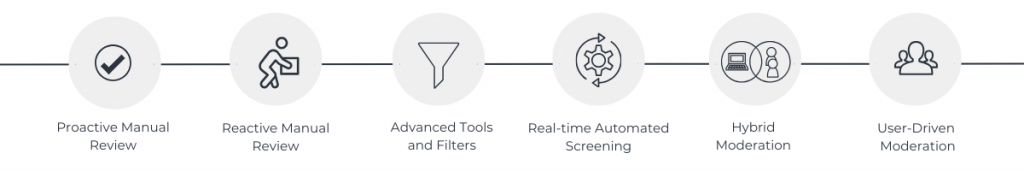

In social media, content moderation acts as the gatekeeper: it monitors, evaluates, and steers user-generated content towards compliance with community guidelines, legal frameworks, and ethical standards. The process involves identifying and removing material that violates established norms, and hate speech is a prime example of such a violation. The work can be done by human moderators through manual review or by automated tools powered by artificial intelligence and machine learning. The goal is to maintain a secure and inclusive digital space while carefully balancing free expression and platform policies. Among the most popular moderation techniques are:

- Proactive Manual Review: Humans review content before publication, preventing harmful material from appearing.

- Reactive Manual Review: Measures are taken after publication when content undergoes manual review supported by real-time tools, user reporting, and community guidelines.

- Advanced Tools and Filters: Specialised tools and filters detect and handle specific types of content.

- Real-time Automated Screening: The approach entails automated screening with human involvement to address complex issues, offering flexibility for diverse content analysis.

- Hybrid Moderation: This approach combines various methods to ensure the highest efficiency, accuracy, and adaptability.

- User-Driven Moderation: Visitors or communities take part by actively reporting or flagging inappropriate content. Rating and voting systems are also employed to elevate highly rated content and conceal or remove low-rated material.

However, effective content moderation must also align with the brand’s values, platform characteristics, and user profile to maximise outcomes. The same content can carry different meanings on different platforms, depending on context and on how specific the guidelines are. These differences must be weighed carefully, so moderation approaches need to be tailored deliberately and strategically.
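The user-driven technique listed above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Post` class, the `visibility` function, and both thresholds are hypothetical and would need tuning to a platform's own guidelines.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds, chosen only for illustration.
FLAG_REVIEW_THRESHOLD = 3   # distinct user reports before a human looks at the post
HIDE_SCORE_THRESHOLD = -5   # net vote score at or below which the post is concealed


@dataclass
class Post:
    post_id: str
    upvotes: int = 0
    downvotes: int = 0
    # Stored as a set of user ids so repeat reports from one user count once.
    flagged_by: set = field(default_factory=set)

    def flag(self, user_id: str) -> None:
        self.flagged_by.add(user_id)

    @property
    def score(self) -> int:
        return self.upvotes - self.downvotes


def visibility(post: Post) -> str:
    """Return 'needs_review', 'hidden', or 'visible' for a post."""
    if len(post.flagged_by) >= FLAG_REVIEW_THRESHOLD:
        return "needs_review"
    if post.score <= HIDE_SCORE_THRESHOLD:
        return "hidden"
    return "visible"
```

In this sketch, reports take precedence over votes, so a heavily flagged post goes to a moderator even if it is popular. That ordering is a design choice, not a rule from the text.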

Technological Challenges in Automatic Detection

Addressing technological challenges involves ongoing research, development, and collaboration between experts in artificial intelligence, machine learning, and content moderation. It requires refining algorithms, understanding cultural nuances, and staying vigilant against emerging threats to create a hate-speech-free environment.

AI plays a crucial role in this effort by automating processes, improving scalability, and providing real-time monitoring at a scale beyond human capacity. It excels at handling large volumes of content, making routine decisions, managing repetitive tasks swiftly, and identifying and blocking inappropriate content, which saves time and reduces the risk of oversight. This type of moderation relies on machine learning models trained on platform-specific data to recognise undesirable material quickly and precisely. However, the effectiveness of AI depends on the availability of high-quality datasets for model training.

Human agents therefore remain invaluable in the many situations that AI cannot handle efficiently. Moderators bring empathy, sensitivity, a deep understanding of detail, and the ability to interpret highly delicate matters. They can handle content that does not conform neatly to predefined rules. Despite being time-consuming, resource-intensive, and susceptible to human error, human moderation remains essential for addressing complex and context-specific content.
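One way to picture this division of labour between AI and human moderators is a routing rule driven by a model's confidence. The sketch below assumes a classifier already exists and returns a probability that a post is hate speech. The `route` function, the `Decision` enum, and both thresholds are hypothetical names and values, not part of any particular platform or library.

```python
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical thresholds; real values would come from evaluating the model
# on platform-specific data.
BLOCK_AT = 0.95    # model is confident enough to act automatically
REVIEW_AT = 0.50   # uncertain band that is escalated to a human moderator


def route(hate_probability: float) -> Decision:
    """Map an (assumed) classifier probability to a moderation decision."""
    if not 0.0 <= hate_probability <= 1.0:
        raise ValueError("hate_probability must be between 0 and 1")
    if hate_probability >= BLOCK_AT:
        return Decision.BLOCK
    if hate_probability >= REVIEW_AT:
        return Decision.HUMAN_REVIEW
    return Decision.PUBLISH
```

The middle band is where context-specific judgement matters most, so widening or narrowing it trades moderator workload against the risk of automated mistakes.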

Additionally, sentiment analysis helps gauge the emotional tone of user-generated content. Positive sentiment may indicate constructive discussion, while negative sentiment may signal potential issues or harmful content. This analysis is especially helpful for prioritising content that poses a higher risk, allowing platforms to address potential concerns swiftly and proactively. A keyword strategy can also establish a targeted approach to specific terms associated with hate speech or inappropriate content. With a well-defined set of keywords, platforms can efficiently detect and act upon content that violates guidelines.
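As a rough sketch of how keyword matching and sentiment could be combined to prioritise a review queue, consider the example below. It is deliberately simplistic: the word lists are placeholders rather than real term lists, the sentiment score is a naive word count rather than a trained model, and the function names and the weighting are assumptions made for illustration.

```python
import re

# Placeholder entries standing in for a platform-maintained keyword list.
BLOCKED_KEYWORDS = {"slur_a", "slur_b"}
# Toy sentiment lexicons; a real system would likely use a trained model.
NEGATIVE_WORDS = {"hate", "disgusting", "stupid"}
POSITIVE_WORDS = {"thanks", "great", "love"}


def tokenize(text: str) -> list:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9_']+", text.lower())


def sentiment(tokens: list) -> int:
    """Count positive words minus negative words."""
    return sum((t in POSITIVE_WORDS) - (t in NEGATIVE_WORDS) for t in tokens)


def priority(text: str) -> int:
    """Higher values mean the post should be reviewed sooner."""
    tokens = tokenize(text)
    keyword_hits = sum(t in BLOCKED_KEYWORDS for t in tokens)
    negativity = max(0, -sentiment(tokens))
    # Keyword hits are weighted above tone; the factor 10 is arbitrary.
    return 10 * keyword_hits + negativity


def review_queue(posts: list) -> list:
    """Order posts so the highest-risk ones come first."""
    return sorted(posts, key=priority, reverse=True)
```

Exact-match keywords miss misspellings, coded language, and context, which is one reason such signals are usually treated as a way to rank content for review rather than as a final verdict.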

Ethical and Societal Considerations

Ethical and societal considerations regarding hate speech mitigation in social media encompass a range of principles and concerns that focus on the impact, responsibilities, and values associated with online content. Key issues span ethical, legal, cultural, and societal dimensions, for example:

- Freedom of Speech vs. Harm Avoidance: Balancing the right to freedom of speech with the need to prevent harm and protect individuals or groups from hate speech is paramount. Social media constantly grapples with the challenge of fostering open dialogue while mitigating the potential adverse effects of harmful content. Therefore, it is imperative to establish transparent, ethical guidelines and policies that carefully delineate the boundary between free expression and harm prevention. Indicating what is deemed acceptable and what is forbidden, these rules should be easily accessible to the audience, ensuring a shared understanding and adherence to community standards.

- Cultural Sensitivity: It is pivotal to recognise and respect norms, values, traditions, language nuances, differing senses of humour, beliefs, and societal settings when moderating content. This is necessary to avoid cultural insensitivity, misunderstanding, or offence. It can be supported by engaging skilled and knowledgeable human agents who understand subtle nuances that automated systems might overlook or misinterpret, which could otherwise lead to inappropriate content being allowed or acceptable content being deleted unnecessarily.

- Talent Management & Well-being: Successful moderation also relies on a skilled and resilient workforce characterised by language proficiency, emotional intelligence, cultural awareness, and the ability to handle pressure. This underscores the importance of talent management practices that prioritise the mental health and professional development of each employee, especially given their daily exposure to hate speech and potentially distressing content.

- Legal Compliance: Adhering to relevant laws and regulations about hate speech and discrimination in different jurisdictions is key for social media. This is because numerous new regulations have been introduced across regions in recent years, shaping the digital landscape and introducing new responsibilities to the providers, for instance:

Summary

As outlined above, hate speech is a serious concern on social media. Curbing its impact demands advanced content moderation that balances human expertise with technology, a customised approach, and swift action. However, responsibility extends beyond online platforms: legislators, non-profit organisations, parents, educators, and society at large all share it, and each can promote a safer digital environment within the scope of their influence, advocacy, and educational initiatives.